Is generative AI the future of rapid and accurate chest radiograph interpretation in the ER?

In a recent study published in the journal JAMA Network Open, researchers evaluated the accuracy and quality of chest radiograph reports generated by an artificial intelligence (AI) tool from input images and compared them with teleradiology reports and reports from in-house radiologists.

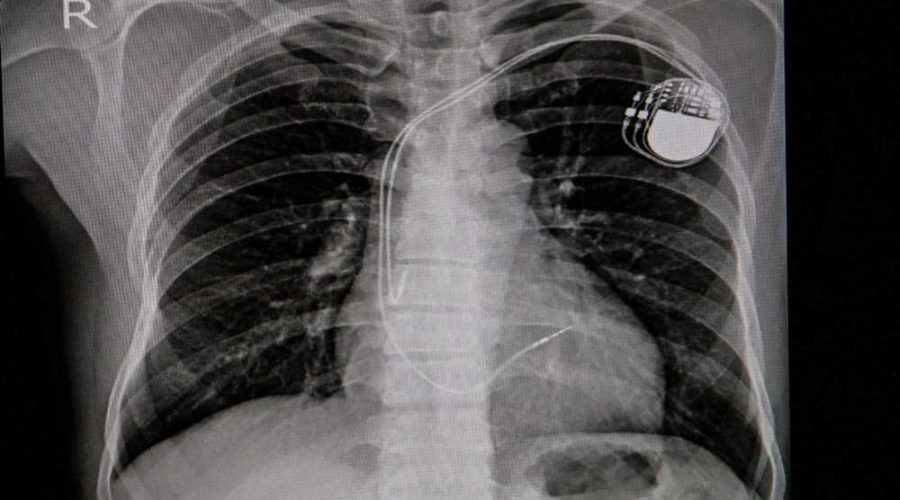

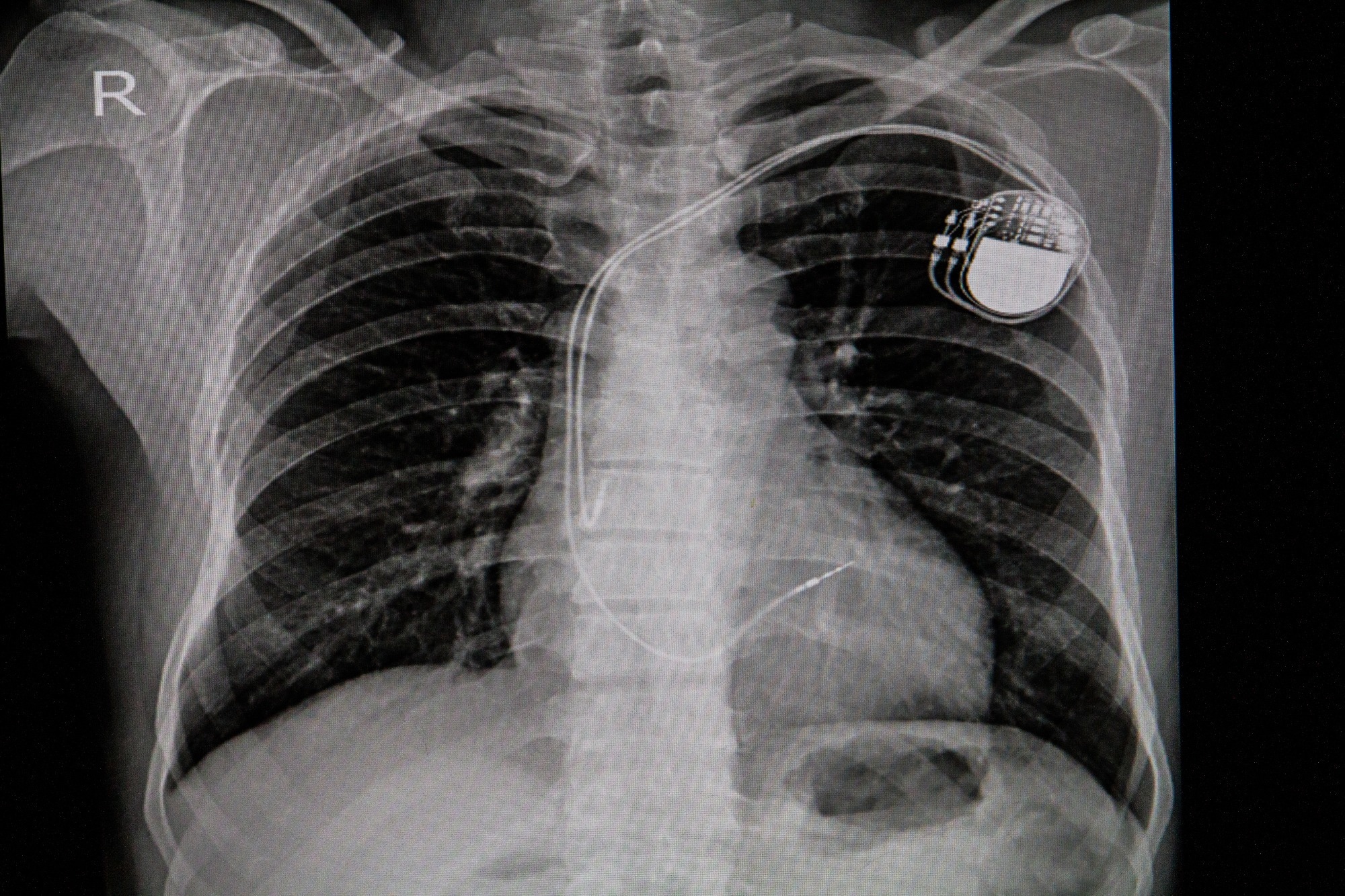

Study: Generative Artificial Intelligence for Chest Radiograph Interpretation in the Emergency Department. Image Credit: April stock / Shutterstock

Background

Timely interpretation of clinical data and efficient patient processing are critical in emergency departments. Although discrepancies between emergency department physicians' and radiologists' interpretations of chest radiographs are uncommon, immediate interpretation by a radiologist can avoid later changes in treatment and callbacks of patients who have already been discharged. Furthermore, as emergency departments rely increasingly on radiology for diagnosis, there is growing interest in systems that can interpret radiographs quickly, streamlining and accelerating patient processing.

Free-standing emergency departments that lack dedicated radiology services or 24-hour coverage currently rely on teleradiology services or on preliminary interpretations by radiology residents. Both options have drawbacks and can introduce errors, for example because the interpreting reader has limited access to the patient's complete clinical record. Meanwhile, AI-based interpretation of clinical data is rapidly becoming a viable option in medical settings.

About the study

In the present study, the team developed an AI tool for interpreting chest radiographs and retrospectively evaluated its performance in the emergency department setting. The tool is based on a transformer encoder-decoder model that takes chest radiographs as input and generates a radiology report as output.
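The article does not describe the model's internals. As general background, in a transformer encoder-decoder for image-to-text tasks the encoder typically turns the image into a set of feature vectors, and the decoder writes the report one token at a time, using cross-attention to weigh those image features at each step. The sketch below illustrates only that cross-attention step, in plain Python with hypothetical toy vectors and helper names (`cross_attention`, `softmax`, `dot`); it is not the study's model, which would also use learned projections, multiple attention heads, and tensor libraries.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_attention(query, keys, values):
    """Single-head scaled dot-product attention of one decoder query over encoder outputs.

    query  -- vector for the report token currently being generated (decoder side)
    keys   -- one vector per image region produced by the encoder
    values -- one vector per image region, aligned with keys
    Returns (weights, context): how strongly the query attends to each region,
    and the weighted average of the value vectors.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context


if __name__ == "__main__":
    # Hypothetical 2-D features for three image regions; real models learn
    # high-dimensional projections and use many attention heads.
    region_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    region_values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    weights, context = cross_attention([1.0, 0.0], region_keys, region_values)
    print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

Regions whose keys align with the query receive the most weight, so the generated word is conditioned mainly on those parts of the image.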

The test dataset consisted of 500 anterior-posterior or posterior-anterior chest radiographs for which both final radiologist and teleradiology reports were available. Radiographs of patients younger than 18 or older than 89 years were excluded. The AI-generated, teleradiology, and final radiologist reports were all deidentified. Prior chest radiographs were also used wherever available.

Six board-certified physicians, blinded to report type, rated the AI-generated, teleradiology, and final radiologist reports with access to additional data, including the chest radiograph images and the type of image acquisition. Each report's clinical accuracy and quality were rated on a five-point Likert scale. Any discrepancy or error that could change the physician's clinical management of the patient was considered a critical finding, and raters were required to comment on every such discrepancy.

A cumulative link mixed model was used to compare Likert scores across the AI-generated, teleradiology, and final radiologist reports. The primary outcome was the difference in Likert scores among the three report types. Secondary analyses estimated the probability that each report type contained a clinically significant discrepancy.
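The article does not spell out the model, so the following is a minimal sketch of a generic cumulative logit mixed model, one common form of cumulative link mixed model for ordinal outcomes such as Likert scores. The probability that a rating falls at or below category k is modeled as logistic(θ_k − η), where the θ_k are ordered cut-points and η combines a fixed effect for report type with random intercepts for the rater and the radiograph. The function names and all numbers are hypothetical illustrations, not estimates or code from the study; in practice the cut-points, fixed effects, and random-effect variances are estimated from data, for example with the `clmm` function in R's `ordinal` package.

```python
import math


def logistic(x: float) -> float:
    """Standard logistic (inverse-logit) function."""
    return 1.0 / (1.0 + math.exp(-x))


def category_probabilities(thresholds, fixed_effect, rater_effect=0.0, image_effect=0.0):
    """Return [P(Y = 1), ..., P(Y = K)] under a cumulative logit model.

    thresholds   -- strictly increasing cut-points theta_1 < ... < theta_{K-1}
    fixed_effect -- coefficient for the report type (hypothetical value)
    rater_effect, image_effect -- random intercepts for the rater and the
                   radiograph; here they are plugged in, not estimated
    """
    if any(a >= b for a, b in zip(thresholds, thresholds[1:])):
        raise ValueError("thresholds must be strictly increasing")
    # Linear predictor: a larger eta shifts probability toward higher Likert scores.
    eta = fixed_effect + rater_effect + image_effect
    # Cumulative probabilities P(Y <= k) = logistic(theta_k - eta); P(Y <= K) = 1.
    cumulative = [logistic(theta - eta) for theta in thresholds] + [1.0]
    # Differences of consecutive cumulative probabilities give P(Y = k).
    probs, previous = [], 0.0
    for c in cumulative:
        probs.append(c - previous)
        previous = c
    return probs


def expected_score(probs):
    """Mean Likert score implied by probabilities for categories 1..K."""
    return sum(k * p for k, p in enumerate(probs, start=1))


if __name__ == "__main__":
    cut_points = [-3.0, -1.5, 0.0, 1.5]  # hypothetical, five-point scale
    for label, beta in [("reference report type", 0.0), ("comparison report type", -0.5)]:
        probs = category_probabilities(cut_points, beta)
        print(label, [round(p, 3) for p in probs], round(expected_score(probs), 2))
```

Because the report-type effect shifts the whole distribution of ratings rather than a single mean, comparing report types in this framework amounts to comparing their fixed-effect coefficients while the random intercepts absorb differences between raters and between radiographs.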

Results

The results suggested that the AI tool produced chest radiograph reports similar in textual quality and accuracy to final radiologist reports and of higher textual quality than teleradiology reports. These findings highlight the tool's potential to aid diagnosis and decision-making in the emergency department.

Of the 500 chest radiographs, 434 were portable anterior-posterior acquisitions, one was an upright posterior-anterior film, and 65 were posterior-anterior and lateral studies. The most common finding was infiltrates, followed by pulmonary edema, pleural effusions, support devices, cardiomegaly, and pneumothorax. Reports scoring below three on the Likert scale were examined further, and their discrepancies were classified as missed significant findings, extraneous findings, or improperly contextualized findings.

In one case, the AI-generated report improved on the radiologist report: the raters noted that the radiologist report missed a new infiltrate that the AI report detected. In another case, the radiologist report described opacities in the image as persistent, whereas both the teleradiology and AI-generated reports indicated that the opacities had worsened, a finding that could be of substantial clinical significance.

Unlike earlier tools that rely on classification approaches, such as binary presence-or-absence predictions for individual pathologies, the AI tool draws on both current and prior chest radiographs and also describes the location, severity, and clinical course of a condition. The AI report also provides contextualized information that can support differential diagnoses and serve as a basis for recommending further evaluation.

Conclusions

To summarize, the study found that the AI tool, designed for rapid interpretation of chest radiographs in the emergency department, produced reports comparable in quality and accuracy to radiologist reports and superior to teleradiology reports in textual quality. With its short processing time and high accuracy, the tool could help emergency department physicians streamline patient processing.

- Huang, J., Neill, L., Wittbrodt, M., Melnick, D., Klug, M., Thompson, M., Bailitz, J., Loftus, T., Malik, S., Phull, A., Weston, V., Alex, H. J., & Etemadi, M. (2023). Generative Artificial Intelligence for Chest Radiograph Interpretation in the Emergency Department. JAMA Network Open, 6(10), e2336100. https://doi.org/10.1001/jamanetworkopen.2023.36100

Written by

Dr. Chinta Sidharthan

Chinta Sidharthan is a writer based in Bangalore, India. Her academic background is in evolutionary biology and genetics, and she has extensive experience in scientific research, teaching, science writing, and herpetology. Chinta holds a Ph.D. in evolutionary biology from the Indian Institute of Science and is passionate about science education, writing, animals, wildlife, and conservation. For her doctoral research, she explored the origins and diversification of blindsnakes in India, as a part of which she did extensive fieldwork in the jungles of southern India. She has received the Canadian Governor General’s bronze medal and Bangalore University gold medal for academic excellence and published her research in high-impact journals.